How do we know the fundamental constants are constant? We don't.

Through a variety of tests on Earth and throughout the universe, physicists have measured no changes in time or space for any of the fundamental constants of nature.

All of modern physics rests on two main pillars. One is Einstein's theory of general relativity, which we use to explain the force of gravity. The other is the Standard Model, which we use to describe the other three forces of nature: electromagnetism, the strong nuclear force and the weak nuclear force. Wielding these theories, physicists can explain vast swaths of interactions throughout the universe.

But those theories do not fully explain themselves. Appearing within the equations are fundamental constants, which are numbers that we must measure independently and plug in by hand. Only with these numbers in place can we use the theories to make new predictions. General relativity depends on only two constants: the strength of gravity (commonly called G) and the cosmological constant (usually denoted by Λ, which measures the amount of energy in the vacuum of space-time).

The Standard Model requires 19 constants. These include the masses of nine fermions (like the electron and the up quark), the strengths of the fundamental forces, and constants that control how the Higgs boson interacts with other particles. And because the Standard Model does not, on its own, account for the masses of neutrinos, we have to add seven more constants to fully describe their behavior.

That's 28 numbers that completely determine all the physics of the known universe.
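To see how the totals add up, here is a minimal sketch of one common bookkeeping of those constants. The grouping below (nine fermion masses, three force couplings, four quark-mixing parameters, two Higgs-sector parameters and one strong-force angle, plus three neutrino masses and four neutrino-mixing parameters, assuming neutrinos are Dirac particles) is an illustrative assumption; the article itself only states the totals.

```python
# One common way to tally the free constants (illustrative grouping).
GENERAL_RELATIVITY = {
    "gravitational constant G": 1,
    "cosmological constant Lambda": 1,
}

STANDARD_MODEL = {
    "fermion masses (6 quarks, 3 charged leptons)": 9,
    "force couplings (one each for SU(3), SU(2), U(1))": 3,
    "quark mixing (CKM) angles and phase": 4,
    "Higgs sector (mass and vacuum expectation value)": 2,
    "strong-force CP angle theta": 1,
}

# Assumes Dirac neutrinos; Majorana neutrinos would need extra phases.
NEUTRINO_EXTENSION = {
    "neutrino masses": 3,
    "neutrino mixing (PMNS) angles and phase": 4,
}


def total(groups):
    """Sum the number of free constants across several groupings."""
    return sum(sum(group.values()) for group in groups)


if __name__ == "__main__":
    print("General relativity:", total([GENERAL_RELATIVITY]))  # 2
    print("Standard Model:", total([STANDARD_MODEL]))          # 19
    print("Neutrino add-ons:", total([NEUTRINO_EXTENSION]))    # 7
    print("All together:",
          total([GENERAL_RELATIVITY, STANDARD_MODEL, NEUTRINO_EXTENSION]))  # 28
```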

Not so constant

Many physicists argue that having all these constants seems a little artificial. Our job as scientists is to explain as many varied phenomena as possible with as few starting assumptions as we can get away with. Physicists also believe that general relativity and the Standard Model are not the end of the story, especially since the two theories are not compatible with each other. They suspect that there is some deeper, more fundamental theory that unites these two branches.

That more fundamental theory could have any number of fundamental constants associated with it. It could have the same set of 28 we see today. It could have its own, independent constants, with the 28 appearing as dynamic expressions of some underlying physics. It could even have no constants at all, with the fundamental theory able to explain itself in its entirety with nothing having to be added by hand.

No matter what, if our fundamental constants aren't really constant — if they happen to vary across time or space — then that would be a sign of physics beyond what we currently know. And by measuring those variations, we could get some clues as to a more fundamental theory.

And physicists have devised a number of experiments to test the constancy of those constants.

Constants to the test

One test involves ultraprecise atomic clocks. The ticking rate of an atomic clock depends on the strength of the electromagnetic interaction, the mass of the electron and the magnetic moment of the proton. Comparing clocks at different locations or observing the same clock for long periods of time can reveal whether any of those constants change.
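In practice, such comparisons track the ratio of two clocks' frequencies over time and ask whether it drifts. The sketch below is a minimal illustration, assuming the measurements are already expressed as small fractional offsets of that ratio from a reference value; the function name `fit_drift` and the data are hypothetical, and converting a drift into a change in a specific constant would also require each clock's sensitivity to those constants, which is left out here.

```python
from typing import Sequence


def fit_drift(times_yr: Sequence[float], offsets: Sequence[float]) -> float:
    """Least-squares slope of fractional frequency offset versus time.

    offsets[i] is (R_i - R_ref) / R_ref, where R_i is the measured ratio of
    two clocks' frequencies at time times_yr[i]. Working with these small
    offsets directly avoids losing precision to floating-point rounding.
    The returned slope is the fractional drift of the ratio per year.
    """
    n = len(times_yr)
    if n != len(offsets) or n < 2:
        raise ValueError("need at least two paired measurements")
    t_mean = sum(times_yr) / n
    y_mean = sum(offsets) / n
    num = sum((t - t_mean) * (y - y_mean) for t, y in zip(times_yr, offsets))
    den = sum((t - t_mean) ** 2 for t in times_yr)
    if den == 0:
        raise ValueError("measurements must span more than one instant")
    return num / den


if __name__ == "__main__":
    # Made-up data for illustration only: a drift of 2e-17 per year plus
    # a small alternating scatter of 1e-18 standing in for measurement noise.
    years = [0, 1, 2, 3, 4, 5]
    offsets = [2e-17 * t + (1e-18 if t % 2 == 0 else -1e-18) for t in years]
    print(f"fitted drift: {fit_drift(years, offsets):.3e} per year")
```

A fitted slope consistent with zero, within its uncertainty, is what sets an upper limit on how fast the underlying constants could be changing.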

Another ingenious test involves the Oklo uranium mine in Gabon. Two billion years ago, the site acted as a natural nuclear reactor that operated for a few million years. If any of the fundamental constants were different back then, the products of those nuclear reactions, which survive to the present day, would differ from what we expect.

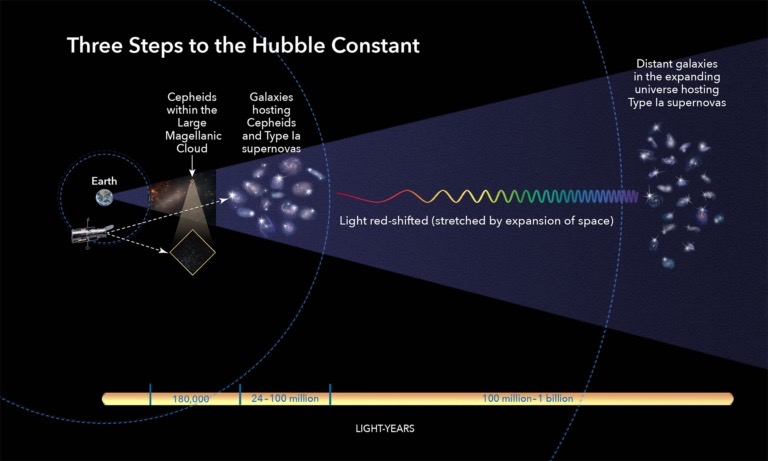

Looking at larger scales, astronomers have studied the light emitted by quasars, which are ultraluminous objects powered by black holes sitting billions of light-years away from us. The light from those quasars had to travel those enormous distances to reach us, and along the way it passed through innumerable gas clouds that absorbed some of it. If the fundamental constants varied across the universe, that absorption would be altered, and quasars in one direction would look subtly different from quasars in other directions.
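One classic way to read the fine structure constant out of quasar absorption, the "alkali-doublet" method, compares the spacing of a closely split pair of absorption lines with its value in the lab. The article does not say which technique or lines are used; the sketch below assumes this method, the C IV doublet, and the leading-order rule that the relative splitting scales as the square of the fine structure constant. The wavelengths are approximate, and `mock_observation` is a hypothetical helper that fakes a spectrum. Many modern studies instead use a more sensitive "many-multiplet" method that compares lines from different ions.

```python
import math

# Approximate laboratory (rest-frame, vacuum) wavelengths of the C IV
# doublet in angstroms; treat these as illustrative, not reference values.
C_IV_LAB = (1548.204, 1550.781)


def relative_splitting(pair: tuple[float, float]) -> float:
    """Doublet separation divided by the mean wavelength.

    Cosmological redshift multiplies both wavelengths by the same factor
    (1 + z), so it cancels out of this ratio.
    """
    short, long_ = pair
    return (long_ - short) / ((long_ + short) / 2)


def delta_alpha_over_alpha(observed: tuple[float, float],
                           lab: tuple[float, float] = C_IV_LAB) -> float:
    """Leading-order estimate of (alpha_then - alpha_now) / alpha_now.

    Assumes the relative splitting of the doublet scales as alpha**2.
    """
    ratio = relative_splitting(observed) / relative_splitting(lab)
    return math.sqrt(ratio) - 1.0


def mock_observation(z: float, eps: float,
                     lab: tuple[float, float] = C_IV_LAB) -> tuple[float, float]:
    """Hypothetical helper: the doublet as it would appear at redshift z if
    alpha had been larger by a fraction eps when the light was absorbed."""
    mid = (lab[0] + lab[1]) / 2 * (1 + z)
    half_gap = (lab[1] - lab[0]) / 2 * (1 + eps) ** 2 * (1 + z)
    return (mid - half_gap, mid + half_gap)


if __name__ == "__main__":
    print(delta_alpha_over_alpha(mock_observation(z=2.0, eps=0.0)))   # ~0
    print(delta_alpha_over_alpha(mock_observation(z=2.0, eps=1e-5)))  # ~1e-5
```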

At the very largest scales, physicists can use the Big Bang itself as a laboratory. They can use our knowledge of nuclear physics to predict the abundance of hydrogen and helium produced in the first dozen minutes of the Big Bang. And they can use plasma physics to predict the properties of the light released when the universe, at an age of 380,000 years, cooled from a plasma to a neutral gas. If the fundamental constants were different long ago, the difference would show up as a mismatch between theory and observation.
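One reason that ancient light is sensitive to the constants: the neutral hydrogen that formed is held together by a binding energy of roughly one-half the fine structure constant squared times the electron's rest energy. The minimal sketch below, assuming CODATA 2018 values and ignoring the reduced-mass, relativistic and quantum-electrodynamics corrections, computes that energy and shows how it would respond to a hypothetical 1% change in the fine structure constant. Because the plasma turned neutral roughly when the cooling universe could no longer keep hydrogen ionized, a larger binding energy would, to a rough approximation, make that happen earlier; real analyses model the full recombination physics instead.

```python
# CODATA 2018 values, rounded.
ALPHA = 7.2973525693e-3               # fine structure constant (dimensionless)
ELECTRON_REST_ENERGY_EV = 510_998.95  # electron rest energy m_e c^2, in eV


def hydrogen_binding_energy_ev(alpha: float = ALPHA,
                               me_c2_ev: float = ELECTRON_REST_ENERGY_EV) -> float:
    """Ground-state binding energy of hydrogen, E = alpha**2 * m_e c**2 / 2.

    Ignores the reduced-mass correction and relativistic/QED effects, so it
    agrees with hydrogen's measured 13.6 eV ionization energy only to
    better than about 0.1 percent.
    """
    return 0.5 * alpha**2 * me_c2_ev


if __name__ == "__main__":
    today = hydrogen_binding_energy_ev()
    print(f"binding energy today: {today:.3f} eV")  # 13.606 eV
    # Hypothetical scenario: alpha 1 percent larger at that early time.
    shifted = hydrogen_binding_energy_ev(alpha=ALPHA * 1.01)
    print(f"ratio if alpha were 1% larger: {shifted / today:.4f}")  # 1.0201
```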

In these experiments and more, nobody has ever observed any variation in the fundamental constants. We can't completely rule it out, but we can place incredibly stringent limits on their possible changes. For example, we know that the fine structure constant, which measures the strength of the electromagnetic interaction, is the same throughout the universe to 1 part per billion.

While physicists continue to search for a new theory to replace the Standard Model and general relativity, it appears that the constants we know and love are here to stay.

Follow us on Twitter @Spacedotcom or on Facebook.

Paul M. Sutter is a cosmologist at Johns Hopkins University, host of Ask a Spaceman, and author of How to Die in Space.