Dark energy remains a mystery. Maybe AI can help crack the code

"If you wanted to get this level of precision and understanding of dark energy without AI, you'd have to collect the same data three more times in different patches of the sky."

As humans struggle to understand dark energy, the mysterious force driving the universe's accelerated expansion, scientists have started to wonder something rather futuristic: Can computers do any better? Initial results from a team that used artificial intelligence (AI) techniques to infer the influence of dark energy with unmatched precision may suggest an answer: Yes.

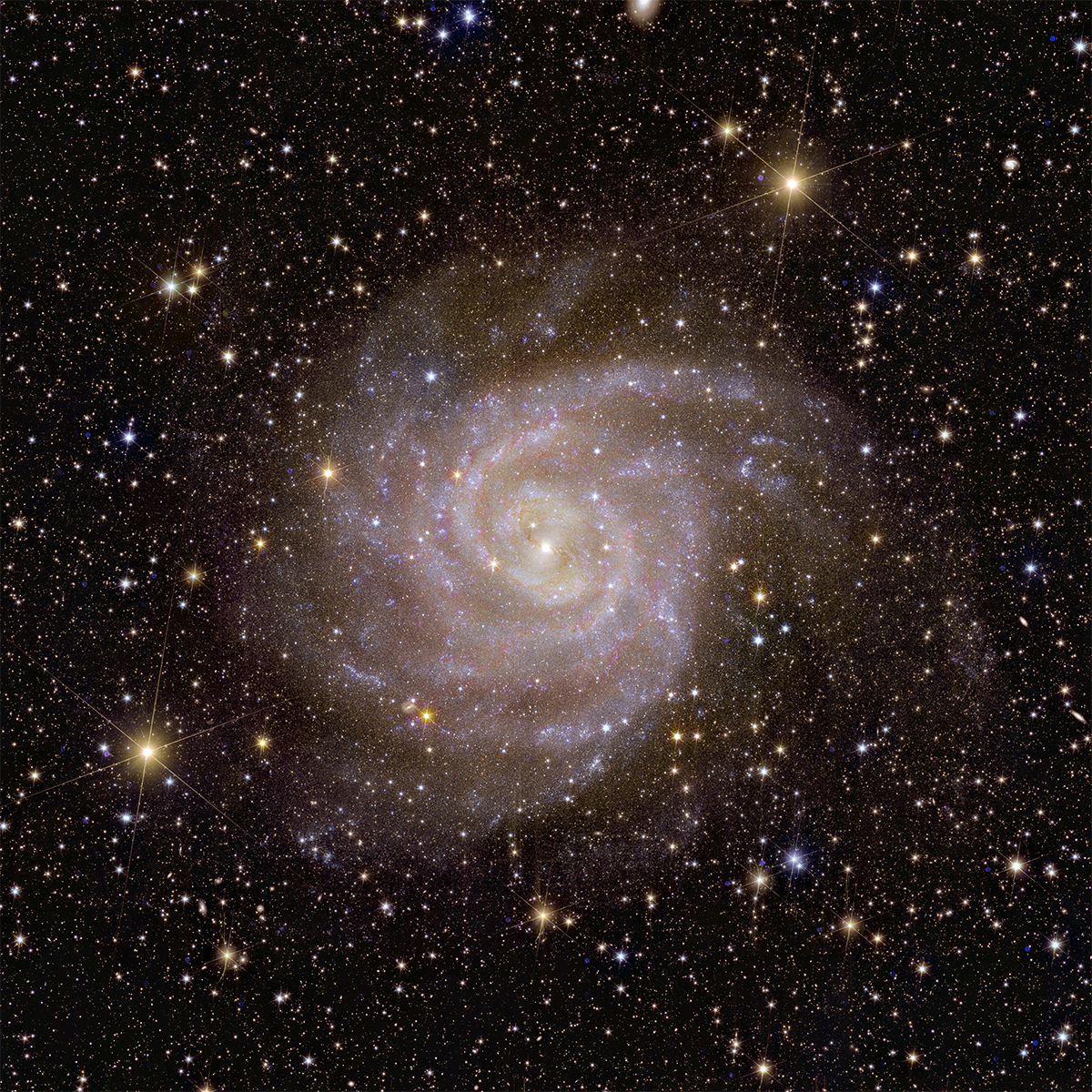

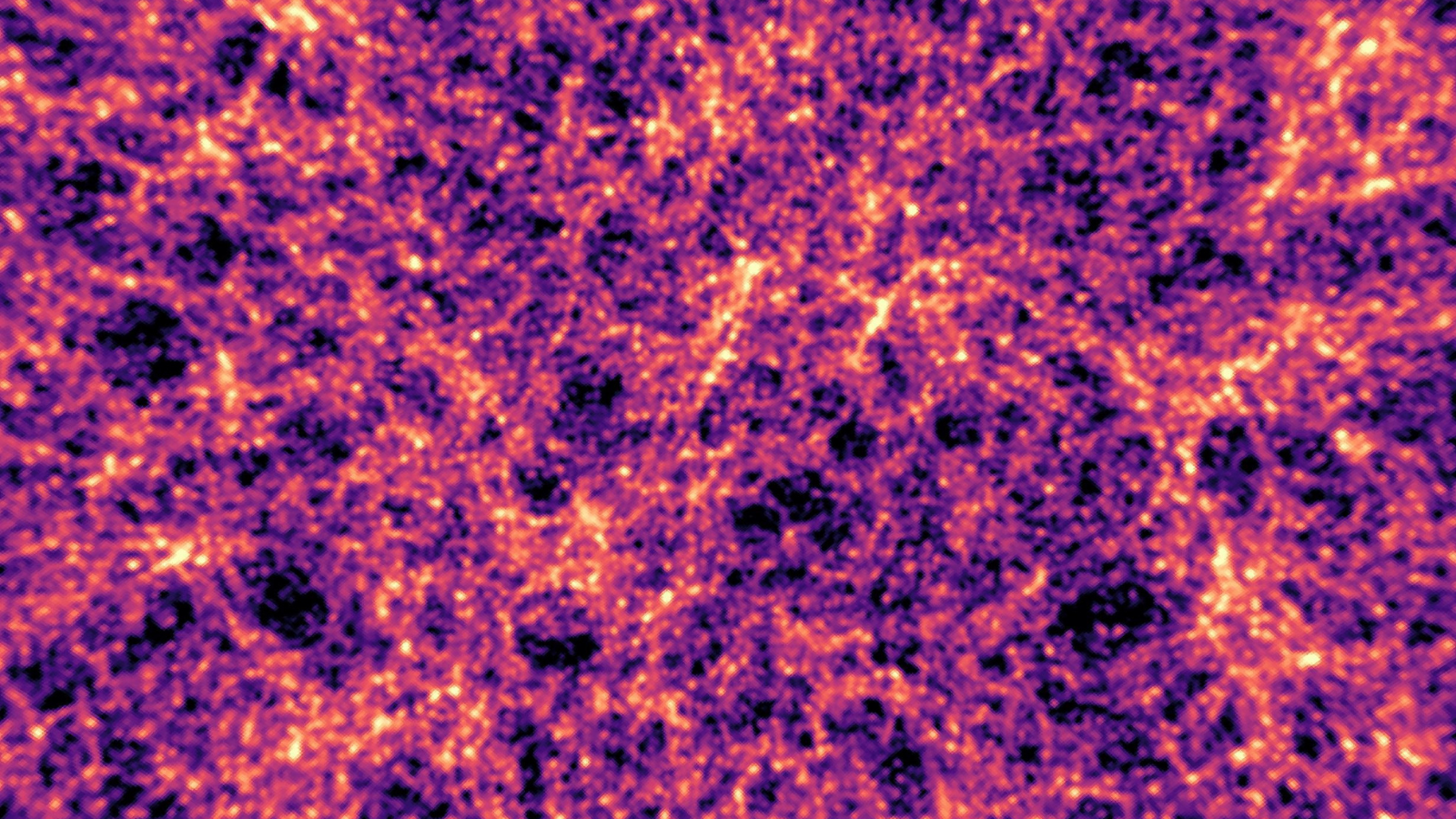

The team, led by University College London scientist Niall Jeffrey, worked with the Dark Energy Survey collaboration to use measurements of visible matter and dark matter to create a supercomputer simulation of the universe. While dark energy helps push the universe outward in all directions, dark matter is a mysterious form of matter that remains invisible because it doesn't interact with light.

After creating the cosmic simulation, the crew then employed AI to pluck out a precise map of the universe that covers the last seven billion years and showcases the actions of dark energy. The team's resultant data represents a staggering 100 million galaxies across around 25% of Earth's Southern Hemisphere sky. Without AI, creating such a map using this data, which represents the first three years of observations from the Dark Energy Survey, would have required vastly more observations. The findings help validate which models of cosmic evolution are viable when combined with dark energy dynamics, while ruling out some others that may not be.

"Compared to using old-fashioned methods for learning about dark energy from these data maps, using this AI approach ended up doubling our precision in measuring dark energy," Jeffrey told Space.com. "You'd need four times as much data using the standard method.

"If you wanted to get this level of precision and understanding of dark energy without AI," Jeffrey added, "you'd have to collect the same data three more times in different patches of the sky. This would be equivalent to mapping another 300 million galaxies."

The problem with dark energy

Dark energy is sort of a placeholder name for the mysterious force that speeds up the expansion of the universe, pushing distant galaxies away from the Milky Way, and from each other, faster and faster over time.

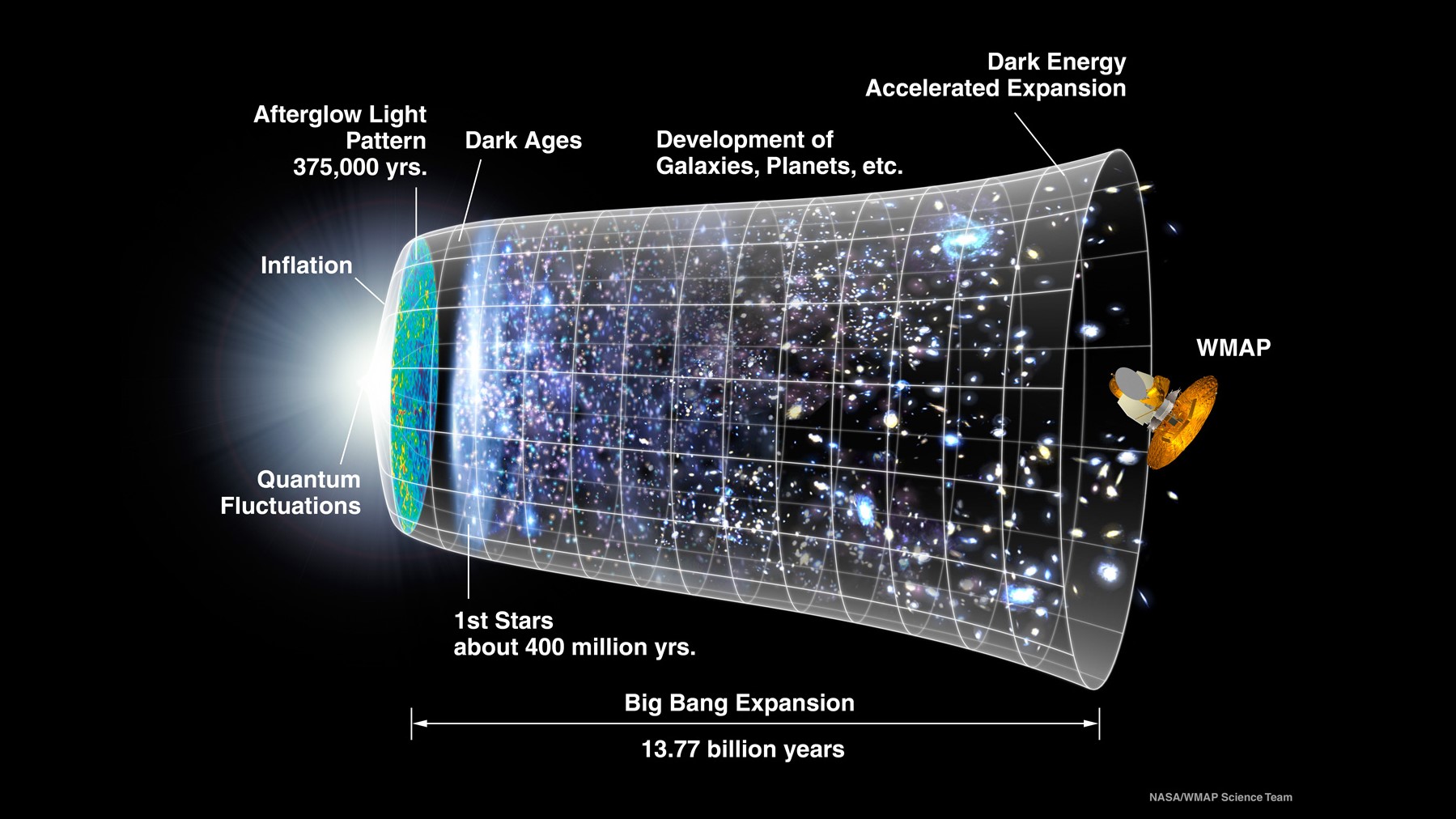

The present period of accelerated expansion is separate from the "cosmic inflation" that followed the birth of the universe in the Big Bang; it seems to have kicked in after that initial expansion had slowed down.

Imagine giving a child a single push on a swing. The swing slows after that initial force is added, but instead of coming to a halt, without you pushing again, the swing suddenly begins moving once more. That would be quite strange in itself, but there's actually more. The swing would also start accelerating after suddenly restarting movement, reaching ever-increasing heights and speeds. This is similar to what's going on in space, with the universe's bubbling outward in place of a swing moving back and forth.

You would probably be pretty eager to understand what added that extra "push" and caused the acceleration. Scientists feel the same about whatever dark energy is, and how it appears to have added an extra cosmic push on the very fabric of space.

This desire is compounded by the fact that dark energy accounts for around 70% of the universe's energy and matter budget, even though we don't know what it is. When factoring in dark matter, which accounts for 25% of this budget and can't be made of atoms we're familiar with — those that make up stars, planets, moons, neutron stars, our bodies and next door's cat — we only really have visible access to about 5% of the whole universe.

"We really don't understand what dark energy is; it's one of those weird things. It's just a word that we use to describe a kind of extra force in the universe that's pushing everything away from each other as the universe's expansion continues to accelerate," Jeffery said. "The Dark Energy Survey is trying to understand what dark energy is. The main thing we're trying to do is ask the question: Is it a cosmological constant?"

The cosmological constant, represented by the Greek letter lambda, has quite a storied history for cosmologists. Albert Einstein first introduced it to ensure that the equations of his revolutionary 1915 theory of gravity, general relativity, supported what's known as a "static universe."

This concept was challenged, however, when observations of distant galaxies made by Edwin Hubble showed that the universe is expanding and is thus not static. Einstein threw the cosmological constant into the scientific dustbin, allegedly describing it as his "greatest blunder."

In 1998, however, two separate teams of astronomers observed distant supernovas to discover that not only was the universe expanding, but it seemed to be doing so at an accelerating rate. Dark energy was invented as an explanation for the force behind this acceleration, and the cosmological constant was fished out of the hypothetical dustbin.

Now, the cosmological constant lambda represents the background vacuum energy of the universe, acting almost like an "anti-gravity" force driving its expansion. As of now, the cosmological constant is the leading explanation for dark energy.
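
For readers who want the textbook form of this "anti-gravity" behavior, here is a standard sketch that is not taken from the team's paper. It uses the acceleration equation for the cosmic scale factor $a(t)$, where $\rho$ is the energy density expressed as a mass density, $p$ is the pressure, and $w$ is the equation-of-state parameter; all of these symbols are introduced here for illustration only.

```latex
\frac{\ddot{a}}{a} = -\frac{4\pi G}{3}\left(\rho + \frac{3p}{c^{2}}\right),
\qquad p = w\,\rho c^{2}
\quad\Longrightarrow\quad
\ddot{a} > 0 \iff w < -\tfrac{1}{3} \quad (\rho > 0).
```

A cosmological constant corresponds to $w = -1$ with a density that does not dilute as space expands, so it satisfies the condition for acceleration. In this language, asking whether dark energy is a cosmological constant amounts to asking whether the measurements are consistent with $w = -1$.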

"Our results, compared to the use of standard methods with this same dark matter map, are tightly honed down, and we've found that this is still consistent with dark energy being explained by a cosmological constant," Jeffrey said. "So we've excluded some physical models of dark energy with this result."

This doesn't mean that the mysteries of dark energy, or the headache that the cosmological constant represents, have been resolved, however.

'The worst prediction in the history of physics'

The cosmological constant still poses a massive problem for scientists.

That's because observations of distant, receding celestial objects suggest a lambda value 120 orders of magnitude (10 followed by 119 zeroes) smaller than what's predicted by quantum physics. It is thus for good reason that the cosmological constant has been described as "the worst theoretical prediction in the history of physics" by some scientists.

Jeffrey is clear: As happy as the team is with these results, this research can't yet explain the massive gulf between theory and observation.

"That disparity is just too big, and it tells us that our quantum mechanical theory is wrong," he continued. "What these results can tell us is what kind of equations or what kind of physical models describe the way our universe expands and how gravity works, pulling everything composed of matter in the universe together."

Also, while the team's results suggest general relativity is the right recipe for gravity, they can't rule out other potential gravity models that could explain the observed effects of dark energy.

"On the face of it, just looking at these results alone are consistent with general relativity — but still, there's lots of wiggle room because it also allows for other theories of how dark energy or gravity work as well," Jeffrey said.

This research demonstrates the utility of using AI to assess simulated models of the universe, pick out important patterns that humans may miss, and thus hunt for important dark energy clues.

"Using these techniques, we can get results as if we had got that data three more times — that's quite amazing," Jeffrey said.

The UCL researcher points out that it will take a very specific form of AI that is well-trained in spotting patterns in the universe to perform these studies. Cosmologists won't be able to just feed universe-based simulations to their AI systems like one may plug questions into ChatGPT and expect results.

"The problem with ChatGPT is that if it doesn't know something, it will just make it up," he said. "What we want to know is when we know something and when we don't know something. So I think there's still a lot of growth needed so that people interested in work combining science and AI can get reliable results."

Another six years of data are still to come from the Dark Energy Survey, which, combined with observations from the Euclid telescope launched in July 2023, should provide much more information about the universe's large-scale structures. This should help scientists refine their cosmological models and create even more precise simulations of the universe, which could finally lead them to answers regarding the dark energy puzzle.

"It means that the simulated universes we generate are so realistic; in some sense, they can be more realistic than what we've been able to do with our old-fashioned methods," Jeffrey concluded. "It is not just about precision, but believing in these results and thinking they're reliable."

The team's research is available as a preprint on the paper repository arXiv.

Robert Lea is a science journalist in the U.K. whose articles have been published in Physics World, New Scientist, Astronomy Magazine, All About Space, Newsweek and ZME Science. He also writes about science communication for Elsevier and the European Journal of Physics. Rob holds a bachelor of science degree in physics and astronomy from the U.K.’s Open University. Follow him on Twitter @sciencef1rst.