Science Fiction’s Robotics Laws Need Reality Check

Artificial intelligence researchers often idealize Isaac Asimov's Three Laws of Robotics as the signpost for robot-human interaction. But some robotics experts say that the concept could use a practical makeover to recognize the current limitations of robots.

Self-aware robots that inhabit Asimov's stories and others such as "2001: A Space Odyssey" and "Battlestar Galactica" remain in the distant future. Today's robots still lack any sort of real autonomy to make their own decisions or adapt intelligently to new environments.

But danger can arise when humans push robots beyond their current limits of decision-making, experts warn. That can lead to mistakes and even tragedies involving robots on factory floors and in military operations, when humans forget that all legal and ethical responsibility still rests on the shoulders of Homo sapiens.

"The fascination with robots has led some people to try retreating from responsibility for difficult decisions, with potentially bad consequences," said David Woods, a systems engineer at Ohio State University.

Woods and a fellow researcher proposed revising the Three Laws to emphasize human responsibility for robots. They also suggested that Earth-bound robot handlers could take a hint from NASA when it comes to robot-human interaction.

Updating Asimov

Asimov's Three Laws of Robotics are set in a future when robots can think and act for themselves. The first law prohibits robots from injuring humans or allowing humans to come to harm through inaction, while the second law requires robots to obey human orders except those that conflict with the first law. A third law requires robots to protect their own existence, except when doing so conflicts with the first two laws.

South Korea has used those "laws" as a guide for its Robot Ethics Charter, but Woods and his colleagues thought they lacked some vital points.

Woods worked with Robin Murphy, a rescue robotics expert at Texas A&M University, to create three laws that recognize humans as the intelligent, responsible adults in the robot-human relationship. Their first law says that humans may not deploy robots without a work system that meets the highest legal and professional standards of safety and ethics. A second revised law requires robots to respond to humans as appropriate for their roles, and assumes that robots are designed to respond to certain orders from a limited number of humans.

The third revised law proposes that robots have enough autonomy to protect their own existence, as long as such protection does not conflict with the first two laws and allows for smooth transfer of control between human and robot. That means a Mars rover should automatically know not to drive off a cliff, unless human operators specifically tell it to do so.
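The cliff example can be pictured as a simple rule: the rover protects itself by default, and gives way only to an explicit human instruction. The sketch below is purely illustrative and is not drawn from Woods, Murphy, or any NASA software; the `DriveCommand` type, the `operator_override` flag, and the `decide` function are hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass
class DriveCommand:
    """A hypothetical drive request sent to a rover."""
    heading_deg: float
    distance_m: float
    # Assumption: operators can explicitly mark a command as overriding
    # the rover's self-protection behavior.
    operator_override: bool = False


def decide(command: DriveCommand, hazard_ahead: bool) -> str:
    """Return "drive" or "hold" for a requested move.

    The rover refuses a hazardous move on its own authority,
    unless a human operator has specifically told it to proceed.
    """
    if hazard_ahead and not command.operator_override:
        return "hold"
    return "drive"


# A routine command toward a cliff edge is refused...
print(decide(DriveCommand(90.0, 5.0), hazard_ahead=True))
# ...but the same move goes ahead when an operator insists.
print(decide(DriveCommand(90.0, 5.0, operator_override=True), hazard_ahead=True))
```

In this toy version the human's explicit instruction always wins, which mirrors the article's point that responsibility stays with the operator rather than the machine.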

Woods and Murphy see such revisions as necessary when robotics manufacturers do not recognize the human responsibility for robots. Murphy said that such attitudes come from a computer software culture, where liability carries less consequence than it does for someone who builds a machine that ends up injuring humans or damaging property.

"What happens is that we're seeing roboticists who have never done any manufacturing or work in the physical world, and don't realize that they're responsible," Murphy told SPACE.com. "At the end of the day, if you make something it's your problem."

She contrasted that attitude with NASA's "culture of safety" and methodical approach that carefully tests robotic probes and rovers, recognizes the limits of robots, and tries to ensure that human operators can quickly jump into the driver's seat when necessary.

NASA's way

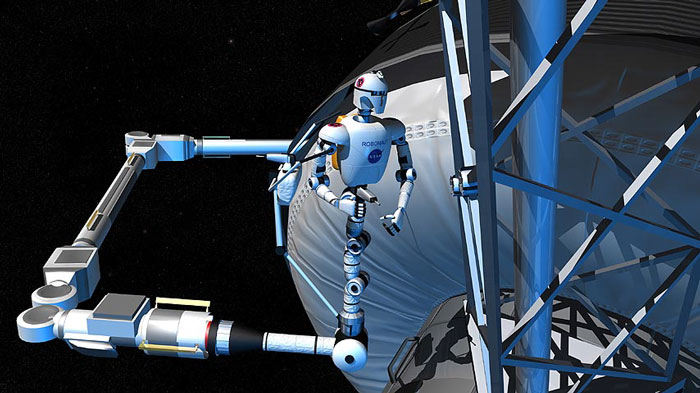

Both Woods and Murphy said they felt very comfortable with NASA's approach to robot-human interactions, whether it involves the robotic arms on the International Space Station or the Cassini probe making its ongoing tour of the Saturn system.

"They understand how valued a resource these robots are," Woods explained. "They know there will be surprises in space exploration."

Murphy pointed out that NASA's approach to robots comes from the AI tradition where people don't make assumptions about having perfect control over everything. Instead, the space agency has used AI systems that can perform well within normal operating patterns, and can still lean on human assistance for more uncertain situations.

This approach has worked well for NASA in many cases. For instance, scientists have done skillful troubleshooting with the Mars rovers Spirit and Opportunity when the robots have encountered unpredictable issues with the Martian terrain and climate.

By contrast, some researchers without an AI background assume that they can program robots that have a complete model or view of the world, and can behave accordingly for any given situation. This coincides with the temptation to assume that giving robots more autonomy means that they can handle most or all situations on their own.

Murphy cautioned that such a "closed world" approach only works with certain situations that present limited options for an AI. No matter how much programming goes into robots, the real open world represents a trap-filled pit of unexpected scenarios.

Transfer of control

NASA would undoubtedly still like to see robots that can take on more responsibility, especially during missions when probes travel beyond the moon or Mars. Researchers have already begun developing next-generation robots that could someday make more of the basic decisions for exploring worlds such as Europa or Titan.

But for now, NASA has recognized that robots still need human supervision — a concept that some robot manufacturers and operators on Earth have more trouble grasping. Unexpected situations such as robot malfunction or environmental surprises can quickly require humans to regain control of the robot, lest it fail to respond in the right way.

"Right now, what we see over and over again is transfer of control being an issue," Murphy noted. She observed how human operators can quickly run into trouble when maneuvering robots and drones around Texas A&M's "Disaster City," a training simulation area for rescuers. Part of the problem also arises when people do not know the capabilities of their robots.

NASA has some luxury in having a team of scientists watch its robotic explorers, rather than single human operators. But mission controllers also maintain high awareness of the limits of their robotic explorers, and understand how to smoothly take over control.

"They understand that when they have a safe or low-risk envelope, they can delegate more autonomy to the robot," Woods said. "When unusual things happen, they restrict the envelope of autonomy."

Woods compared the situation to parents setting up a safe perimeter within which kids can wander and explore. That analogy may continue to serve both NASA and other would-be robot handlers.
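The "envelope of autonomy" Woods describes can be sketched as a policy that widens or narrows what a robot may do on its own depending on risk. This is a minimal illustration under stated assumptions, not a description of how NASA mission control works: the three levels, the 0-to-1 risk score, and the 0.3 threshold are all hypothetical.

```python
from enum import Enum


class Autonomy(Enum):
    """Hypothetical levels of delegated control."""
    FULL = "full"              # robot acts on its own
    SUPERVISED = "supervised"  # robot proposes, humans approve
    MANUAL = "manual"          # humans drive directly


def autonomy_level(risk: float, anomaly: bool) -> Autonomy:
    """Pick how much autonomy to delegate.

    risk is an assumed score between 0.0 (safe) and 1.0 (dangerous);
    anomaly flags that something unusual has happened.
    """
    if anomaly:
        # Unusual events shrink the envelope: humans take over.
        return Autonomy.MANUAL
    if risk < 0.3:  # illustrative threshold, not a real mission value
        return Autonomy.FULL
    return Autonomy.SUPERVISED


print(autonomy_level(risk=0.1, anomaly=False).value)
print(autonomy_level(risk=0.6, anomaly=False).value)
print(autonomy_level(risk=0.1, anomaly=True).value)
```

The design choice worth noticing is that the decision about how much to delegate sits with the human side of the system, much like a parent choosing where to set the perimeter.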

"People are making this leap of faith that robot autonomy will grow and solve our problems," Woods added. "But there's not a lot of evidence that autonomy by itself will make these hard, high-risk decisions go away."

Jeremy Hsu is a science writer based in New York City whose work has appeared in Scientific American, Discovery Magazine, Backchannel, Wired.com and IEEE Spectrum, among others. He joined the Space.com and Live Science teams in 2010 as a Senior Writer and is currently the Editor-in-Chief of Indicate Media. Jeremy studied history and sociology of science at the University of Pennsylvania, and earned a master's degree in journalism from the NYU Science, Health and Environmental Reporting Program. You can find Jeremy's latest project on Twitter.