To Visit Distant Planets, Spacecraft May Need Better Computer Brains

Before robotic probes can land on alien worlds far from human influence and perform other ambitious deep-space feats, their brains will need to level up.

The field of deep learning — which has computers learn to recognize patterns based on training data — has been too risky to use much for spacecraft decision-making. But that may change as missions grow more complicated and the cost of launching small spacecraft decreases, said Ossi Saarela, space segment manager at the computation software company MathWorks.

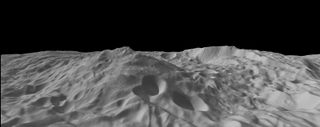

"For things like landings on planets, asteroids [and] comets, the first big problem is just reaching those: the amount of precision you need for navigation is pretty spectacular, when you think about it, considering how far away those objects are and how small they can be," Saarela told Space.com. "One other challenge, of course, with the asteroid and comets and planets in particular, is oftentimes we don't even really know what they look like until we get there. So, that's a challenge that has to be solved if we're going to go fly around them and in particular if we're going to attempt a landing or a sample return."

Right now, NASA's Opportunity rover on Mars is apparently in an autonomous power-saving mode, in which it checks periodically whether the ongoing massive Martian dust storm has cleared enough for the rover to recharge its batteries using solar power and then communicate with Earth. Opportunity, like most rovers and spacecraft, can make its own decisions in the short term by following very specific algorithms, programmed explicitly and rigorously tested on Earth. To prepare a spacecraft in advance for something complicated, like locating a particular feature, programmers would have to describe that feature, and every possible variation of it, very precisely so the spacecraft could recognize it in its travels.

Most spacecraft can make minor decisions on their own (noticing they're veering off course by watching the stars, for instance, and adjusting to get back on track), but the trajectory itself is uploaded from the ground. Similarly, spacecraft can shut down a malfunctioning part, but troubleshooting and rebooting are left to faraway human experts.

But researchers can't account for every contingency a spacecraft might face when arriving at a new asteroid or planet or docking with another spacecraft, a situation Saarela likened to teaching a spacecraft how to go through a door.

"We, as humans, are very good at seeing something and being able to tell what it is — in other words, to classify it," Saarela said. "If we need to go through the door, we know from experience that it's a door and how to walk through it. But if you try to formulate that problem to a machine and write it up in code, it actually becomes a very, very complicated problem: You have to explain in code what a door means, so you probably break it down into something like edges and openings and things like that, and it becomes a lot more complicated than you would imagine."

Using a deep learning process, however, a computer can learn how to recognize features on its own from training data. For instance, researchers might show a computer a lot of pictures, some containing cats, so it can identify when pictures have cats in them. This process is used for computer vision and speech recognition, and in astronomy, it has been used to pinpoint exoplanets and search for evidence of gravitational waves, as well as to analyze sky-distorting gravitational lenses. But human programmers can't peek in and see what features the computers are using in their analyses — leading, for instance, to an image-recognition algorithm identifying sheep only when there is also a green field.
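The sheep-and-green-field problem can be sketched in a few lines of Python. This is only a toy under stated assumptions: a one-feature "decision stump" stands in for a deep network, and the feature names (`woolly_animal`, `green_background`) and the six training examples are invented for illustration. The learner is never told which feature matters, so it settles on whichever one best fits the training labels.

```python
# Toy illustration only: a one-feature "decision stump" stands in for a deep
# network, and the hand-made features and training data below are invented.

# Each training image is reduced to two yes/no features plus a label:
# (woolly_animal, green_background) -> contains_sheep
TRAINING = [
    ((1, 1), 1),  # sheep in a meadow
    ((1, 1), 1),  # another sheep in a meadow
    ((0, 1), 1),  # freshly shorn sheep in a meadow
    ((1, 0), 0),  # fluffy dog on a sofa
    ((0, 0), 0),  # empty street
    ((0, 0), 0),  # another empty street
]
FEATURE_NAMES = ["woolly_animal", "green_background"]


def accuracy(feature_index, examples):
    """Fraction of examples where the chosen feature equals the label."""
    hits = sum(1 for features, label in examples
               if features[feature_index] == label)
    return hits / len(examples)


def train_stump(examples):
    """Pick whichever single feature best matches the training labels."""
    return max(range(len(FEATURE_NAMES)),
               key=lambda i: accuracy(i, examples))


def predict(feature_index, features):
    """Predict 'sheep' exactly when the chosen feature is present."""
    return features[feature_index]


chosen = train_stump(TRAINING)
print("Feature the learner relies on:", FEATURE_NAMES[chosen])

sheep_on_snow = (1, 0)  # a woolly sheep, but no green field
print("Sheep on snow detected?", bool(predict(chosen, sheep_on_snow)))
```

In this made-up data set the green background predicts the label perfectly, so the learner latches onto it and then misses a sheep standing on snow. Real networks learn far subtler features, which is part of why their choices are hard to inspect.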

"When you apply deep learning, you don't really control the set of features that are pulled out by the algorithms, and those features might not be the same features that a human being would pull out of the algorithm," Saarela said. "It's difficult to understand the behavior, and because it's difficult to understand the behavior, there is this hesitation to use it."

It's not an uncertainty people want when launching multimillion-dollar spacecraft on groundbreaking missions.

"Typically, when spacecraft algorithms are designed, they go through a very rigorous verification/validation process that's highly deterministic in nature," Saarela said. "Engineers in the field are used to having a high level of confidence in predicting the output of their algorithm given a specific input, and even though machine learning algorithms produce the same output if you give them the exact same input, if that input changes a little bit, then the output becomes more variable or more unpredictable than what the industry is used to."
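The distinction Saarela draws, between repeatable output and predictable output, can be shown with a very small sketch. Everything here is an assumption made for illustration: the weights are hand-picked rather than trained, the network has only two hidden units, and the "safe" and "hazard" labels are hypothetical.

```python
# Toy illustration only: the weights below are hand-picked, not trained,
# and the "safe"/"hazard" labels are hypothetical.

def relu(value):
    """Rectified linear unit: negative values are clipped to zero."""
    return max(0.0, value)


def classify(x1, x2):
    """A tiny fixed network: two inputs, two hidden ReLU units, one score."""
    h1 = relu(40 * x1 - 20 * x2 - 4)
    h2 = relu(-30 * x1 + 35 * x2 + 1)
    score = h1 - h2
    return "safe" if score > 0 else "hazard"


nominal = (0.50, 0.50)
nudged = (0.47, 0.53)  # each input shifted by only 0.03

# Identical input, identical output, every time.
print([classify(*nominal) for _ in range(3)])

# A slightly different input lands on the other side of the decision.
print(classify(*nominal), "->", classify(*nudged))
```

The function is fully deterministic, yet a shift of 0.03 in each input flips the label. With only four weights the boundary is easy to find by hand; with millions of learned weights, testing enough inputs to locate every such boundary becomes the hard part.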

Finding a way to test enough situations to be confident in the network's decisions is one key challenge, and finding enough high-quality training data — like processed images of real locations or spacecraft, or maybe specially generated data someday — is another.

But researchers at private companies and agencies like NASA are beginning to consider the use of machine learning and are looking into sharing training data sets, Saarela said. MathWorks' products MATLAB and Simulink are already used for spacecraft guidance, navigation and control algorithms. So, Saarela said, adding machine learning capabilities provided by MATLAB — and deep learning, which is a subset of those capabilities — is a natural extension.

And he said he expects this approach to grow more common as missions become more complex and launches less expensive.

"I would expect the proofs of concept to come from either startups or projects that are lower-cost, projects that are willing to tolerate higher risk," Saarela said. "And I think that the reason that we're going to start seeing it is just because people won't be able to resist the performance gains that they can achieve. And once you have a low-cost-enough mission, the risk becomes worth it."

The increasing capabilities of mini-satellite platforms like cubesats — some of which have even gone to deep space — are a key example of that lowering cost.

"Another thing is, there is this general trend towards even the big-science missions becoming more and more complicated and more and more ambitious, and I do think that in order to achieve some of these science goals, you might start seeing machine learning algorithms being deployed — maybe not on the primary controller on a spacecraft that's responsible for the safety of the spacecraft itself, but maybe on some of the smaller science experiments and payloads," he added.

The technology is on the cusp of being worthwhile for these projects, Saarela said. Soon, a well-trained spacecraft up close to an object in space may have an advantage over a faraway engineer, even with the risk of a misunderstanding.

"Perhaps you're flying over a planet, an asteroid, a comet, and there's a feature that would have a high either scientific interest or commercial value, but in order to get the information that you need, you have to move the spacecraft or you have to move the camera … and if you don't do it right now, you're going to miss it because you'll have flown by," Saarela said. "Unless you understand your target very, very well, you won't have that information." Machine learning processes may someday soon let a spacecraft figure it out on the fly and get that crucial measurement.

Email Sarah Lewin at slewin@space.com or follow her @SarahExplains. Original article on Space.com.

Sarah Lewin started writing for Space.com in June of 2015 as a Staff Writer and became Associate Editor in 2019. Her work has been featured by Scientific American, IEEE Spectrum, Quanta Magazine, Wired, The Scientist, Science Friday and WGBH's Inside NOVA. Sarah has an MA from NYU's Science, Health and Environmental Reporting Program and an AB in mathematics from Brown University. When not writing, reading or thinking about space, Sarah enjoys musical theatre and mathematical papercraft. She is currently Assistant News Editor at Scientific American. You can follow her on Twitter @SarahExplains.