How to Build a Smarter Mars Rover

The next mission to Mars could carry a smarter rover that is able to make better decisions absent instructions from Earth.

Engineers are looking to automate some of the simple decision-making steps undertaken by Mars rovers and orbiters, which could dramatically improve the science they are able to perform in the search for habitable environments.

"There are a lot of situations in which rovers can benefit from a little bit of extra onboard decision-making," research technologist David Thompson, of NASA's Jet Propulsion Laboratory in Pasadena, California, told Astrobiology Magazine.

Thompson is the principal investigator of the TextureCam Intelligent Camera Project, a NASA system that enhances autonomous investigations. While TextureCam will allow the rover or satellite to better prioritize the data it sends to Earth, Thompson stressed that it isn't meant to take the place of human scientists.

"We're not trying to do scientific interpretations," he said. "The rover can never replace the scientist on the ground, at least not for many decades."

A richer palette

Mars rovers — and, to a lesser degree, orbiters — face an important challenge when it comes to sending data back to Earth.

Thompson described the most common way a rover operates: Scientists notice an interesting feature, perhaps from orbit, perhaps on the rover's camera, and the rover is directed to approach the area. The rover sends back preliminary images so scientists can determine an appropriate spot to drill or perform the necessary science. Another command cycle or two passes while the rover deploys its instruments.

Getting the rover set up takes several command cycles, which occur about once every six hours on Mars, depending on the location. Reducing that time lets the rover accomplish more science.

"The more you can include the rover, the richer palette you have to give commands," Thompson said.

Virginia Gulick, of the SETI (Search for Extraterrestrial Intelligence) Institute and NASA's Ames Research Center in Silicon Valley, agreed.

"If automated image analysis is combined with other data, such as mineralogical data from spectrometer, and integrated into a system of science analysis algorithms, the resulting information can be very helpful," she said.

Gulick, a planetary geologist, wasn't involved in the development of TextureCam. She leads a team working to develop autonomous mineral and rock classifiers for future robotic missions. The results of their automated mineral classifier were published in a 2013 paper in the journal Computers and Geosciences by Sascha Ishikawa and Gulick.

Preliminary results for the rock classifier portion of the program were presented by California Polytechnic State University student Luis Valenzuela at the 46th Lunar and Planetary Science Conference in Texas in March. Valenzuela worked on the project as a SETI Institute summer intern under the mentorship of Gulick and research assistant Patrick Freeman, who is also part of Gulick’s team.

TextureCam automates simple aspects of geological image analysis, such as searching for ideal veins of rock to sample or stopping to examine an interesting seam based on preprogrammed instructions without waiting for commands from Earth. Rovers are constantly moving through the Martian landscape (Curiosity travels tens or hundreds of meters every day) and almost never backtrack.

"You can pass through terrain that you'll never see again," Thompson said.

Giving the rover the ability to recognize ideal material in its path to investigate can eliminate some missed opportunities. Thompson compared the process to telling a kindergartner to find a rock with layers; the child can't perform the necessary science, but he or she can figure out where to look with simple instructions.

Thompson emphasized that rovers such as Curiosity don't spend a lot of time sitting around; scientists and engineers find ways to fill the dead time. Including the rover in the decision-making gives them more flexibility in how that time is spent.

Similarly, Gulick's rock and layer detectors could be combined with other science analysis programs, such as the automated mineral classifier her team developed, to make a rover function more independently, and thus more efficiently.

"We could autonomously locate layers, rocks, analyze the spectra and images and also return compositional, textural and color information," Gulick said.

TextureCam and Gulick's automated science analysis algorithms, informally known as the Geologist’s Field Assistant project, can help improve the transmission of data.

"On current missions, all data is sent back to Earth and analyzed by scientists," Gulick said. "This creates a bottleneck for science exploration, because returned data is limited by downlink volume and the number of command cycles per day."

As a result, not all of the data collected is sent back to Earth.

"You always collect more data than you can transmit," Thompson said.

Today, most rovers send back thumbnail-sized images. Scientists on Earth then decide which full-size images they want downloaded first; the appropriate commands are sent, and the data is transmitted. Allowing the rover to use the image content to determine what to send back first can help eliminate some of the back-and-forth, ultimately permitting more information to return to Earth. Thompson emphasized that the unsent images aren't lost, and can always be accessed by scientists at a later date.
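
The prioritization described above can be pictured as a small budgeting problem. The Python sketch below is only an illustration, not TextureCam's actual method: the `Image` record, the `interest` score and the `plan_downlink` function are hypothetical names invented here, and the image sizes and the 10-megabyte budget are made-up numbers. It ranks queued images by an onboard interest score and sends as many as fit in the next downlink, keeping the rest onboard for later.

```python
from dataclasses import dataclass


@dataclass
class Image:
    name: str
    size_bytes: int
    interest: float  # hypothetical onboard score in [0, 1]; higher is more interesting


def plan_downlink(images, budget_bytes):
    """Pick images for the next downlink, most interesting first.

    Images that do not fit stay in the onboard queue; nothing is discarded.
    """
    ranked = sorted(images, key=lambda im: im.interest, reverse=True)
    send, keep = [], []
    remaining = budget_bytes
    for im in ranked:
        if im.size_bytes <= remaining:
            send.append(im)
            remaining -= im.size_bytes
        else:
            keep.append(im)
    return send, keep


# Made-up queue of three images and a made-up 10 MB downlink budget.
queue = [
    Image("sol100_a", 4_000_000, 0.20),
    Image("sol100_b", 6_000_000, 0.90),
    Image("sol100_c", 3_000_000, 0.55),
]
send, keep = plan_downlink(queue, budget_bytes=10_000_000)
print([im.name for im in send])  # prints ['sol100_b', 'sol100_c']
print([im.name for im in keep])  # prints ['sol100_a']
```

In this toy run the two highest-scoring images fill most of the budget, and the third waits for a later pass, which matches Thompson's point that unsent images are not lost.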

"If higher-level information can also be returned during these command cycles, then the science return can be dramatically improved," Gulick said. [The 6 Strangest Robots Ever Created]

Thompson will submit the most recent TextureCam test results for publication in the Journal of Artificial Intelligence Research. The TextureCam project is supported by the NASA Astrobiology Science and Technology Instrument Development program.

Peering through the clouds

In the Mojave Desert of southern California, Thompson put TextureCam through its paces. In the Mars-like terrain, the instrument analyzed the texture of rocks to determine if there was layering. Another robot named Zoë, run by Carnegie Mellon University in Pennsylvania, uses the same software to explore the Atacama Desert in Chile, making changes on the go as it comes across interesting features. Zoë has been running for the past three years.

But the ground isn't the only place the software has been put to work. Launched in 2013, the IPEX satellite still orbits Earth today, though its last transmission came in January 2015. During its mission, IPEX collected several images of the planet each minute. But the coast isn't always clear from Earth's orbit, and IPEX captured many cloud-filled images. Thanks to the TextureCam software, the components of which are available as open-source software on GitHub, the tiny cubesat was able to prioritize cloud-free images to send to scientists on Earth.
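
To give a feel for what prioritizing cloud-free images could involve, here is a deliberately simplified Python sketch. It is not the IPEX or TextureCam code: it swaps in a plain brightness threshold as a stand-in for a real cloud detector, and the function names, the threshold of 200 and the tiny 2-by-2 "frames" are all assumptions made for illustration.

```python
def cloud_fraction(pixels, threshold=200):
    """Fraction of pixels at or above a brightness threshold (0-255 grayscale).

    Treating bright pixels as cloud is an assumed stand-in for a real classifier.
    """
    flat = [p for row in pixels for p in row]
    return sum(p >= threshold for p in flat) / len(flat)


def rank_by_clear_sky(frames):
    """Order frame names from least to most cloudy."""
    return sorted(frames, key=lambda name: cloud_fraction(frames[name]))


# Made-up 2x2 grayscale frames.
frames = {
    "frame_1": [[250, 240], [230, 90]],  # 3 of 4 pixels bright
    "frame_2": [[60, 80], [70, 210]],    # 1 of 4 pixels bright
    "frame_3": [[50, 40], [90, 100]],    # no bright pixels
}
print(rank_by_clear_sky(frames))  # prints ['frame_3', 'frame_2', 'frame_1']
```

A spacecraft using a ranking like this would transmit the clearest frames first and leave the cloudiest for last, or skip them if bandwidth runs out.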

"With half the Earth's surface covered by clouds, you can potentially double the scientific application without changing the hardware," Thompson said.

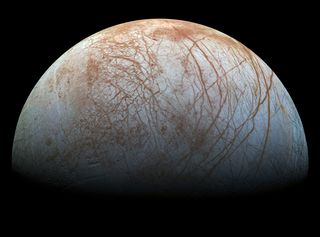

With plans in the works for a future Europa mission, a more automated rover could prove useful when it comes time to explore the surface of the icy moon of Jupiter.

"Typically, the farther away from Earth you go, the lower bandwidth you get," Thompson said.

For Europa, sending a command to a lander and seeing the results takes about 90 minutes round-trip. For a slow-moving rover, the delay would dramatically slow the pace of science. IPEX and Zoë both demonstrate the possibilities available to upcoming missions, whether to Mars, Europa, or beyond.

"We're trying to make the robot a better partner for the scientists," Thompson said. "It's just a question of identifying those stepping stones."

Much of the technology debuted in the private sector. Artificial intelligence and pattern recognition are available in many smartphones and on websites that recognize poses.

"It's totally natural to deploy those in outer space, too," Thompson said. "We don't have to re-invent the wheel."

This story was provided by Astrobiology Magazine, a web-based publication sponsored by the NASA astrobiology program.

Nola Taylor Tillman is a contributing writer for Space.com. She loves all things space and astronomy-related, and enjoys the opportunity to learn more. She has a Bachelor’s degree in English and Astrophysics from Agnes Scott College and served as an intern at Sky & Telescope magazine. In her free time, she homeschools her four children. Follow her on Twitter at @NolaTRedd.